Authentication Protocols, Web UX and Web API

The back to basics post about token validation published a few weeks ago was overwhelmingly well received – hence, always the data driven kind – here I am jotting down the logical next step: an overview of authentication protocols.

You heard the names: SAML, OAuth 1 and 2, WS-Federation, Kerberos, WS-Trust, OpenID and OpenID Connect, and various others. You probably already have a good intuitive grasp of what those are and what they are for, but more often than not you don’t know how those really work. Usually you don’t need to, which is why you can happily skip this post and still be successful taking advantage of those in your solutions. However, as stated in the former back to basics post, sometimes it is useful to pry Maya’s veil open and get a feeling of the prosaic, unglamorous inner workings of those technologies. It might rob you of some of your innocence, but that knowledge will also come in immensely handy if you find yourself having to troubleshoot some stubborn auth issue.

Also, a word of caution here. This is one of my proverbial posts written during an intercontinental flight. Nobody is paying me to write it, I am doing it mostly for passing the time, hence it might end up being more verbose and ornate than some of you might like. With all due respect, this is my personal blog, I am writing in my personal time off, this page is being served to your browser from bandwidth I pay out of pocket. If you don’t like verbose and ornate, well…

Overview

In a nutshell: an authentication protocol is a choreography of sorts where a protected resource, a requestor and possibly an identity provider exchange messages of a well defined format, in a well defined sequence – to the purpose of allowing a qualified requestor to access the protected resource.

The type of resource, and the mode with which the requestor attempts to access it, are the main factors influencing which shape and sequence of messages are suitable for addressing each scenario.

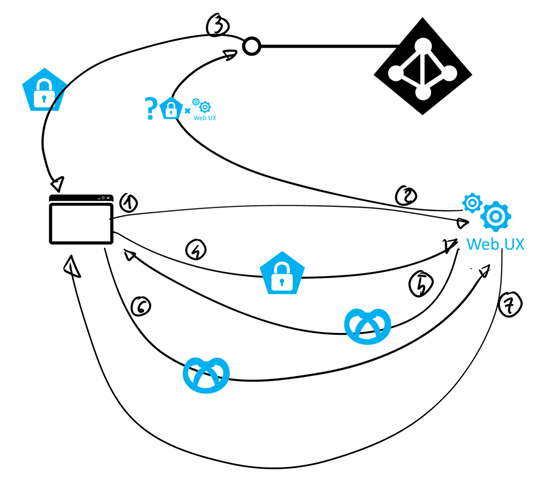

The canonical taxonomy defines two broad protocol categories: passive protocols (meant to be used by web apps which render their UX through a browser) and active protocols (meant to address scenarios in which a resource is consumed by native apps, or apps with no UX at all).

Today’s app landscape has to some degree outgrown that taxonomy, as the two categories exchanged genetic material and traits from one contaminated the other: HTML5 and JavaScript can make browsers behave like native apps, and native apps can occasionally whip out a windowed browser for a quick dip in passive flows. Despite those developments, like a Newtonian physics of sorts the canonical taxonomy remains of interest – both for its didactic potential (it’s a great introduction) and for its concrete predictive power (for now, most apps are still accurately described by it). Hence, in the remainder of this post I am going to unfold its details for you – and reserve the discussion of finer points to some other future posts.

Web UX and Passive Protocols

Open a browser in private mode, and navigate to the Microsoft Azure portal. You’ll be bounced to authenticate against AAD, either via its cloud based credential gathering pages or via your ADFS if your tenant is federated. Upon successful authentication, you’ll finally see the Azure portal take shape in your browser. What you just experienced is an example of a passive authentication flow.

Passive protocols are designed to protect applications that are consumed via web browser; web “pages”, if you will. The protocol takes its “passive” modifier from the nature of its main means of interaction, the web browser.

Truly, the web browser has no “will” of its own. Think about it: you kickstarted the process by typing a web address and hitting enter, and what followed was 100% determined by what the server replied to that initial GET. The browser passively (get it?) interpreted whatever HTTP codes came back, doing the server’s bidding with (nearly, see below) no contribution of its own.


SAML-P, classic OpenID and OpenID Connect and WS-Federation are all examples of passive protocols, conceived to operate by leveraging the aforementioned browser behavior. They emerged at different historical moments for addressing different variations of the common “web pages app” theme, all employ wildly different syntaxes, but if you squint (sometimes a little, sometimes a lot) they all roughly conform to the same high level patterns.

Most passive protocols are concerned about two main tasks:

- Enforce that a request includes valid authentication material

- Failing that, challenge unauthenticated requestors to authenticate

Note: for the sake of simplicity, today I’ll ignore session management considerations like sign out and its various degrees/stages.

Let’s consider the two tasks in reverse order.

Sign in

Put yourself in the “shoes” of the Azure portal during the flow described earlier. You are sitting there all nice and merry, and suddenly from the thick fog one hand emerges, handing you a post-it that says something to the effect of “GET /”. You don’t see who’s handing you the note, and there’s nothing else you can use to infer the identity of the requestor. You need to know, because 1) you’ll serve only requests from legitimate customers and 2) the content of the HTML you’d return for “/” (services, sites, etc) depends on the caller’s identity!

You have two possible courses of action here. You could simply state that the caller is unauthorized by sending back a 401, but that would not get the user very far: the browser would render the content, presumably a scary looking error, and there would be no recovering from it.

The other possibility is what is typically done by passive protocols: instead of simply asserting that the caller is unauthenticated, you can proactively start the process of making the necessary authentication take place. In the Azure portal case, we know that we need users to authenticate with Azure AD; hence, we can send off the caller to Azure AD with a note detailing what we want Azure AD to do – authenticate the caller and send it back to us once that’s done.

That is the first protocol artifact we encounter: the sign in request. Every protocol flavor has its own message format and transport technique, but the main common traits are:

- It’s almost always implemented as an HTTP 302 toward the URL of an endpoint of the identity provider (IP, the entity that plays the role that Azure AD played in our sample scenario)

- The message typically contains:

  - Some construct indicating that the message is a sign in request

  - An identifier which unambiguously indicates to the IP the identity of the application the requestor is attempting to access

  - Occasionally, the return address to which the browser should be redirected once the sign in operation has taken place

Some protocols can omit the return address because the IP might already know what addresses should be used for each application it knows about, and the app involved in the request is unambiguously indicated by its identifier.

In fact, for the above to work the IP usually needs to have pre-existing knowledge of the app requesting the sign in. This stems from both security reasons (e.g. you don’t want your users to authenticate with apps you don’t know about, as this might lead to unintended disclosure of info) and practical ones (e.g. the IP needs to know what info is required by each of the apps it knows about).

Want to see a couple of examples? Here they are. The constructs in the messages follow the same color coding as the abstract concepts they implement as introduced above.

Here is a sign in message in OpenID Connect:

You can see it contains the endpoint of the IP, the identifier of the app (the client_id) and an indication of the type of transaction we want to perform. This specific example does not feature a return address, but that’s simply a function of what I could fish out from what Fiddler traces I have on my local disk (as I write those words we are flying over Iceland).
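If you want a rough feel for how such a message is put together, here is a minimal Python sketch that composes the URL an app would place in the Location header of its 302. Everything specific in it is an assumption for illustration: the endpoint `https://idp.example.com/authorize`, the `my-app-id` identifier, the state and nonce values and the `build_oidc_sign_in_url` helper are all made up, and the parameter set is just one plausible combination of standard OpenID Connect request parameters, not a copy of any real trace.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def build_oidc_sign_in_url(authorization_endpoint: str, client_id: str,
                           state: str, nonce: str,
                           redirect_uri: Optional[str] = None) -> str:
    """Compose the URL that goes in the Location header of the 302."""
    params = {
        "client_id": client_id,            # identifier of the app
        "response_type": "code id_token",  # the type of transaction requested
        "response_mode": "form_post",      # ask for the result as an auto-posted form
        "scope": "openid",                 # marks the request as OpenID Connect
        "state": state,                    # echoed back by the IP with the response
        "nonce": nonce,                    # ties the id_token to this request
    }
    if redirect_uri is not None:
        # Only sent when the app wants to spell out the return address.
        params["redirect_uri"] = redirect_uri
    return f"{authorization_endpoint}?{urlencode(params)}"


# Hypothetical values, for illustration only.
url = build_oidc_sign_in_url("https://idp.example.com/authorize", "my-app-id",
                             state="abc123", nonce="n-0S6")
sent = parse_qs(urlsplit(url).query)
print(url)
print(sorted(sent))
```

The endpoint, the app identifier and the "this is a sign in" markers map one to one to the abstract elements listed earlier; the return address is the only optional piece in this sketch.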

Here is a WS-Federation sign in message:

    HTTP/1.1 302 Found
    Location: https://login.windows.net/9b94b3a8-54a6-412b-b86e-3808cb997309/wsFederation?wa=wsignin1.0&
      wtrealm=https%3a%2f%2flogin.windows.net%2f
      &wreply=https%3a%2f%2flocalhost%2f
      &wctx=3wEBD09BdXRoMk[.snip.]7Q2&wp=MBI_FED_SSL&id=

Here we see all elements.
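To make the mapping explicit, here is a small Python sketch that takes the Location URL from the trace above (minus the snipped `wctx` and the trailing parameters) and pulls out the three elements we discussed. The `describe_sign_in_request` helper and the names of the dictionary keys are mine, introduced only for illustration.

```python
from urllib.parse import parse_qs, urlsplit

# The Location header from the trace, without the snipped/opaque parameters.
location = (
    "https://login.windows.net/9b94b3a8-54a6-412b-b86e-3808cb997309/wsFederation"
    "?wa=wsignin1.0"
    "&wtrealm=https%3a%2f%2flogin.windows.net%2f"
    "&wreply=https%3a%2f%2flocalhost%2f"
)


def describe_sign_in_request(location: str) -> dict:
    """Map the WS-Federation query parameters to the abstract message elements."""
    parts = urlsplit(location)
    query = {name: values[0] for name, values in parse_qs(parts.query).items()}
    return {
        "ip_endpoint": f"{parts.scheme}://{parts.netloc}{parts.path}",
        "is_sign_in": query.get("wa") == "wsignin1.0",  # the "sign in request" marker
        "app_identifier": query.get("wtrealm"),         # which app is asking
        "return_address": query.get("wreply"),          # where to send the browser back
    }


request = describe_sign_in_request(location)
for name, value in request.items():
    print(f"{name}: {value}")
```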

On a side note: you have to marvel at the power of evolution. Here there are two different protocols, born in different times from (mostly) different people and yet, the “body plan” is remarkably similar. But I digress.


What happens next – as in what HTML is served back once the browser honors the 302 and hits the specified IP endpoint with the message – is up to the IP. In the Azure AD case, there’s sophisticated logic determining whether the user should enter credentials right away or be redirected to a local ADFS, whether the look & feel of the page should be generic or personalized for a specific tenant, whether the user gets away with just typing username and password or if a 2nd authentication factor is required… and this is just the behavior of one provider, at this point in time. Protocols typically do not go into the details of what an IP should do to perform authentication. However, they do prescribe how the outcome of a successful authentication operation should be represented and communicated back to the app.

From the other back to basics post you are already familiar with the use of security tokens for representing a successful authentication. It is not the only method, but we’ll focus on it today.

Different protocols mandate different requirements on the types of tokens they admit: SAML only works with SAML tokens, OpenID Connect only uses JWTs as id_tokens, WS-Federation works with any format as long as it can reference it, and so on.

Another big difference between protocols lies in the method used to send the tokens back to the application. We’ll take a look at that next.

Request Validation

The principal aspects of this phase regulated by each protocol’s specs are what kind of tokens are sent, where in the message they are placed, which supporting info is required and which format such a message should generally assume.

The outcome of a successful sign in operation with the IP is a message that travels back to the application, carrying some proof that the authentication took place (e.g. the token) and some supporting material that the app can use to validate it.

That can be accomplished in a number of ways: once again with a plain 302, or with some more elaborate mechanism such as a page with some JavaScript designed to auto-post to the app a form containing the token. WS-Federation does the latter; OpenID Connect offers the app a choice between numerous methods, including the auto-post form. Here is an example:

    HTTP/1.1 200 OK [.snip.]
    Content-Length: 2334

    <html><head><title>Working...</title></head><body>
    <form method="POST" name="hiddenform" action="https://localhost/">
    <input type="hidden" name="code" value="AwABAA[.snip.]USF2uByAA" />
    <input type="hidden" name="id_token" value="eyJ0eXA[.snip.]-odog" />
    <input type="hidden" name="state" value="OpenIdConnect.AuthenticationProperties=xDn9ksWT[.snip.]sPUxKFJL7Q" />
    <input type="hidden" name="session_state" value="1dc37d42-cc43-4d82-93d5-521feb8fb27e" />
    <noscript>
    <p>Script is disabled. Click Submit to continue.</p>
    <input type="submit" value="Submit" />
    </noscript>
    </form>
    <script language="javascript">
    window.setTimeout('document.forms[0].submit()', 0);
    </script>
    </body></html>

As you can see, the content is a form with the requested token and some javascript to POST it back to the application. The app is responsible for accepting the form, locating the token in it and validating it as discussed here. A valid token is what the app was after when it triggered all this brouhaha, hence getting it basically calls for “mission accomplished” (but don’t go home yet, read the next section first).
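For the curious, this is roughly what the receiving end of that POST could look like, as a minimal Python sketch. It only covers accepting the form and locating the token; validating the token itself is a separate step and is not shown. The `read_sign_in_response` helper is hypothetical, the field values are placeholders rather than real tokens, and comparing `state` against the value sent with the sign in request is my assumption about how the app correlates request and response.

```python
from urllib.parse import parse_qs, urlencode


def read_sign_in_response(form_body: str, expected_state: str) -> dict:
    """Pull the fields out of the auto-posted form and do basic sanity checks."""
    fields = {name: values[0] for name, values in parse_qs(form_body).items()}
    if fields.get("state") != expected_state:
        # The response does not belong to a sign in request we issued.
        raise ValueError("state does not match the sign in request we issued")
    if "id_token" not in fields:
        raise ValueError("the response carries no id_token")
    return fields  # the id_token still has to be validated before trusting it


# Placeholder values standing in for what the browser would POST.
sample_body = urlencode({
    "code": "placeholder-code",
    "id_token": "placeholder-id-token",
    "state": "placeholder-state",
    "session_state": "placeholder-session-state",
})
fields = read_sign_in_response(sample_body, expected_state="placeholder-state")
print(fields["id_token"])
```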

Bonus Track: Application Session

As far as protocols are usually concerned, delivering the token to the app concludes the transaction. However, as you might imagine, dancing that dance for every single GET your app UX requires would be jolly impractical. That’s why in order to understand how the authentication flow actually works we need to go a bit beyond the de jure aspect of the protocols and look at the de facto of the common implementations.

Upon successful validation of an incoming token via passive protocol, it is typical for a web app to emit a session cookie – which testifies that the validation successfully took place, without having to repeat it (along with token acquisition) at every subsequent request. In fact, such a session cookie will be included by the browser at every subsequent request to the app’s domain: at that point, the app just needs to verify that the cookie is present and is valid. As there is no standard describing what such cookies should look like, it is up to the app (or better, to the protocol stack the app relies on) to define what “valid” means. It is customary to protect the cookie against tampering by signing and encrypting it, to assign to it an expiration instant (often derived from the expiration of the token it has been derived from) and so on. As long as the cookie is present and valid, a session with the app is in place. One implication of this is that such a cookie needs to be disposed of when a user (or other means) triggers a sign out.

Also note, this cookie is separate and distinct from whatever cookie the IP might have itself produced for its own domain after authenticating the user – but the two are not independent. For example: if a sign out operation cleared only the app cookie but left the IP cookie undisturbed it would not really disconnect the user, given that the first request to the app would be redirected to the IP and the presence of the IP cookie would automatically sign the user back in without requiring any credential entering. But as I mentioned earlier, I don’t want to dig into sign out today hence I won’t go into the details.

This would also be a good place for starting a digression on how cookies aren’t well suited to AJAX calls, and how modern scenarios like SPAs might be better off tracking sessions by directly saving tokens and attaching them to requests, but this post is already fantastically long (now we are flying over Greenland, almost cleared it in fact) hence we’ll postpone to another day.
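Since there is no standard here, take the following Python sketch as just one possible shape for such a cookie, under loud assumptions: it signs the cookie with an HMAC and attaches an expiration instant, but it does not encrypt it (the standard library offers no encryption primitive), so real stacks go further than this. The function names, the cookie layout and the key are all invented for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _sign(body_b64: str, key: bytes) -> str:
    return hmac.new(key, body_b64.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_session_cookie(claims: dict, expires_at: float, key: bytes) -> str:
    """Serialize the claims with an expiration instant and append a signature."""
    body = json.dumps({"claims": claims, "exp": expires_at}).encode("utf-8")
    body_b64 = base64.urlsafe_b64encode(body).decode("ascii")
    return f"{body_b64}.{_sign(body_b64, key)}"


def read_session_cookie(cookie: str, key: bytes,
                        now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the cookie is untampered and not expired, else None."""
    body_b64, separator, mac = cookie.rpartition(".")
    if not separator:
        return None
    expected = _sign(body_b64, key)
    if not hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8")):
        return None  # tampered with, or signed with a different key
    session = json.loads(base64.urlsafe_b64decode(body_b64))
    now = time.time() if now is None else now
    if session["exp"] <= now:
        return None  # the session is over: time for a new sign in
    return session["claims"]


key = b"hypothetical-key-kept-on-the-server"
# Expiration would typically be derived from the token's own expiration.
cookie = issue_session_cookie({"sub": "alice"}, expires_at=1000.0, key=key)
print(read_session_cookie(cookie, key, now=999.0))
```

Sign out, in this model, amounts to telling the browser to drop the cookie, which is why clearing only the app's cookie leaves the IP's own session untouched.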

Well, if you managed to read all the way to here – congratulations! Now you know more about how passive protocols work, and why they deserve their moniker. Let’s shift Sauron’s eye to their (mostly) nimbler siblings, the active protocols.

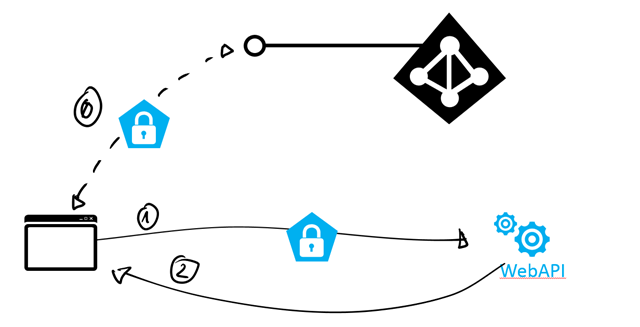

Web API and Active Protocols

Whereas a classic web app packs in a single bundle both the logic for handling the user experience and the server side processing, using the browser as a puppet for enacting its narrative, in the case of a web API (and all web services in general) the separation of concerns is more crisp. The consumer of the web API – be it a native app, the code-behind of a server side process or whatever else – acts according to its own logic and presents its own UX when and if necessary: the act of calling the web API is triggered by its own code, rather than as a reaction to a server directive. The web API serves back a representation of the requested resource, and what the requesting app does with it (processed? Visualized? Stored?) is really none of the web API’s business.

This radically different consumption model influenced the way in which authentication considerations latch to it. For starters, every request to a resource is modeled as an independent event, rather than part of a sequence: no cookies here. If a requestor does not present the necessary authentication material along with a request, it will simply get a 401: there won’t be automatic redirects to the IP because that’s simply incompatible with the model. If your code is performing a GET for a resource, it expects a representation of that resource or an error: getting a 302 would make no sense, as there’s no generic HTTP/HTML processor to act on it.
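The contrast between the two reactions to an unauthenticated request fits in a few lines. Here is a minimal Python sketch: the passive side reuses the WS-Federation parameters seen earlier, the active side answers with a bare 401 and a `WWW-Authenticate: Bearer` header. The function names, the endpoint and the app identifier are hypothetical, and the (status, headers) tuples are just a stand-in for whatever your web framework uses.

```python
from typing import Dict, Tuple
from urllib.parse import urlencode

Response = Tuple[int, Dict[str, str]]


def passive_challenge(ip_endpoint: str, app_identifier: str) -> Response:
    """Web UX: send the browser to the IP with a sign in request."""
    query = urlencode({"wa": "wsignin1.0", "wtrealm": app_identifier})
    return 302, {"Location": f"{ip_endpoint}?{query}"}


def active_challenge() -> Response:
    """Web API: no redirect, just tell the caller it is not authenticated."""
    return 401, {"WWW-Authenticate": "Bearer"}


# Hypothetical coordinates, for illustration only.
web_ux_reply = passive_challenge("https://idp.example.com/wsfed",
                                 "https://myapp.example.com/")
web_api_reply = active_challenge()
print(web_ux_reply)
print(web_api_reply)
```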

A requestor is expected to have its own logic for acquiring the necessary authentication material (e.g. a token) before it can perform a successful request to a protected web API. The task of acquiring tokens and the task of using them to access resources are regulated by pairwise-related – but nonetheless different & distinct – protocols. In the WS-* world, token acquisition was regulated by WS-Trust and resource access was secured according to WS-Security. In today’s RESTful landscape, token acquisition is mostly done through one of the many OAuth2 grants while resource access is done according to OAuth2 bearer token usage spec and its semi-proprietary variants.

Today I’ll gloss over the token acquisition part (after all, you can always rely on ADAL to do the heavy lifting for you) and concentrate on securing access to resources, mostly to highlight the differences with the passive flows.


It is tempting to deconstruct active calls as a passive flow where the token acquisition took place out of band and there’s no session establishment – every call needs to pack the original token. That is in the right direction but not quite correct: the further difference is that whereas in passive flows the token is sent via a dedicated message with explicit sign in semantics, active flows are designed to augment resource requests with tokens.

In terms of syntax, I am sure you are familiar with how OAuth2 adds tokens to resource requests: Authorization header and query parameter are the most common token vessels in my experience. WS-Security is substantially more complicated, and also occasionally violates what I wrote earlier about the lack of session, hence I will conveniently avoid giving examples of it.
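As a quick illustration of those two vessels, here is a minimal Python sketch that looks for a bearer token first in the Authorization header and then in the `access_token` query parameter, the names used by the OAuth2 bearer token usage spec. The `extract_bearer_token` helper is hypothetical, and it assumes headers and query string have already been parsed into plain dictionaries, with the header name spelled exactly `Authorization`.

```python
from typing import Dict, Optional


def extract_bearer_token(headers: Dict[str, str],
                         query: Dict[str, str]) -> Optional[str]:
    """Locate the token travelling with a resource request, if there is one."""
    scheme, _, credentials = headers.get("Authorization", "").partition(" ")
    if scheme.lower() == "bearer" and credentials.strip():
        return credentials.strip()
    # Fall back to the query string vessel.
    return query.get("access_token")


from_header = extract_bearer_token({"Authorization": "Bearer placeholder-token"}, {})
from_query = extract_bearer_token({}, {"access_token": "placeholder-token"})
missing = extract_bearer_token({"Authorization": "Basic dXNlcjpwdw=="}, {})
print(from_header, from_query, missing)
```

Whatever comes back still needs to be validated before the request is served; a `None` is what would lead to the 401.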

Libraries and Middleware

At this point you have a concrete idea of how passive and active protocols work, which is what I was going for with this post. Before I let the topic go, though, I’d like to leverage the fact that you have the topic fresh in your mind to highlight some interesting facts about how you go about using those protocols when developing and connect some dots for you.

First of all: in most of the post I referred to the app as the entity responsible for triggering sign ins, verifying tokens, dropping cookies and the like. Although that is certainly a possible approach, we all know that it’s not how it goes down in practice. Protocol enforcement has to take place before your app logic has a chance of kicking in, and it consists of a nicely self-contained chunk of boilerplate logic – hence it is a perfect candidate for being externalized in the infrastructure, outside of your application. Besides: you don’t want to have security-dense code every single time you need to protect a resource, and have it intertwined with your app code.

There are multiple places where the authentication protocol logic can live: the hosting layer, the web server layer and the programming framework are all approaches in common use today.

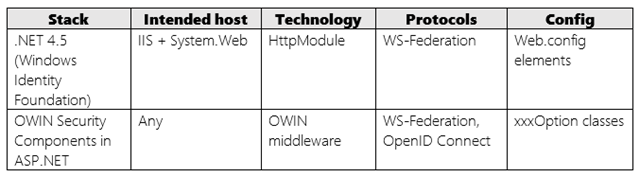

Stacks for Passive Protocols

Examples of passive protocol implementations on the .NET platform are the claims support in .NET 4.5.x (the classes coming from Windows Identity Foundation) and the OWIN security components in ASP.NET. Check out the summary table below:

Both are technologies designed to live in front of your app and perform their function before your code is invoked. Both require you to specify the coordinates that – now you know – are necessary for driving the protocol’s exchanges: the IP you want to work with, the identifier of your app in the transaction, occasionally the URL at which tokens should be returned, token validation coordinates, and so on.

The main differences between the two stacks are a function of when the two libraries were conceived. WIF came out at a time in which identity protocols were still the domain of administrators, hence the way in which you specify the coordinates the protocol needs is verbose and fully contained in the web.config (to make it possible to change it without touching the code proper). You can scan the web.config of an app configured to use WIF and find explicit entries for literally all the color coded coordinates discussed above. Also, at the time everything ran on IIS and System.Web – hence, the technology for intercepting requests was fully based on the associated extensibility model.

The new OWIN security components for ASP.NET have been conceived in a world where IPs routinely publish machine readable documents (“metadata documents”) listing many of the coordinates required for driving protocol transactions – hence configuring one app can be greatly simplified by referring to such documents rather than listing each coordinate explicitly. As the claims based identity protocols became mainstream for developers, the web.config centric model ceased to be necessary. Finally, today’s high density services and in general a desire for improved portability and host-independence led to the use of OWIN, a processing pipeline that can be targeted without requiring any assumption about where an app is ultimately hosted.
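To give an idea of what "referring to a metadata document" buys you, here is a minimal Python sketch that reads a few coordinates out of an OpenID Connect style discovery document. The sample document is made up (all the `example.com` URLs are placeholders), the `coordinates_from_metadata` helper is hypothetical, and a real stack would download the document from the IP rather than read it from a string.

```python
import json

# A made-up excerpt of a discovery document; real ones carry many more entries.
SAMPLE_METADATA = """
{
  "issuer": "https://idp.example.com/",
  "authorization_endpoint": "https://idp.example.com/authorize",
  "token_endpoint": "https://idp.example.com/token",
  "jwks_uri": "https://idp.example.com/keys"
}
"""


def coordinates_from_metadata(document: str) -> dict:
    """Pick out the coordinates a passive protocol stack needs."""
    metadata = json.loads(document)
    return {
        "issuer": metadata["issuer"],                           # who signs the tokens
        "sign_in_endpoint": metadata["authorization_endpoint"], # where to send the 302
        "signing_keys_url": metadata["jwks_uri"],               # where the validation keys live
    }


coordinates = coordinates_from_metadata(SAMPLE_METADATA)
print(coordinates)
```

With this in place, the only things left to configure by hand are the address of the metadata document and the identifier of your app.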

Despite all those differences, the goals and overall structure of the two stacks remain the same, implementing a passive sign in protocol: intercepting unauthenticated requests, issuing sign in challenges toward the intended IP, retrieving tokens from messages and validating them – all this while requiring minimally invasive configuration and impact on your own app.

Stacks for Active Protocols

If you exclude the token acquisition part, as we did in this post, implementing active protocols is easier. Or I should say, the protocol part itself is easy: it boils down to locating the token in the request, determining its type for the protocols allowing for more than one, and feeding it to your validation logic.

The token validation can be arbitrarily complex: for example, handling proof of possession tokens in formats like SAML, which require canonicalization, is not something you’d want to implement from scratch. However, those scenarios are less and less common – today the rising currency is bitco… oops, bearer tokens, which are usually dramatically easier to handle. Let’s focus on those.

The OAuth2 bearer token usage spec does not mandate any specific token format – that said, the most commonly used format for scenarios where boundaries are crossed (see here) is JWT. That also happens to be the format used in OpenID Connect and pretty much everywhere in Azure AD.

Say that you want to secure a web API as described by the OAuth2 bearer token usage spec, and that you expect JWT as the token format. What are your implementation options today on the .NET stack? Mainly two:

- Use the OWIN security components for ASP.NET. There are some middleware components that, when included in your pipeline, will take care of finding the token in messages, validating it and adding the caller as the user in the current context. As there are fewer moving parts than in the passive case, the configuration is also simpler. Note that this approach will also ensure that your settings are fresh, given that they are obtained from the IP’s metadata at every restart of the app.

- Implement the protocol yourself. Given that it is not that hard to interject logic that intercepts bearer tokens at every call, it is feasible to write your own logic that does so leveraging the platform’s extensibility points (for example a DelegatingHandler for ASP.NET web API). The token validation in itself is less fun, but we do provide a class (the JWT token handler) that wraps that exact functionality and is pretty easy to use – see this sample to observe the approach in action.

Of course this is not nearly as easy to use as the OWIN components: for starters, you have to feed the handler all the validation coordinates in fine detail and keep them fresh. Still, it is an option if for some reason you can’t use OWIN or you need more control.
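To show what "feeding all the validation coordinates" means in practice, here is a deliberately simplified Python sketch of the checks a do-it-yourself validator performs on a JWT: signature, issuer, audience and expiration. Big assumption, stated loudly: to stay within the standard library it verifies an HS256 signature with a shared key, whereas tokens issued by Azure AD are signed with asymmetric keys, so this is not a drop-in replacement for the JWT token handler or for any real library. The function names, issuer, audience and key are all invented.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def make_demo_jwt(payload: dict, key: bytes) -> str:
    """Build an HS256 token for the demo; a real token comes from the IP."""
    header_b64 = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload_b64 = _b64url_encode(json.dumps(payload).encode())
    mac = hmac.new(key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256)
    return f"{header_b64}.{payload_b64}.{_b64url_encode(mac.digest())}"


def validate_bearer_jwt(token: str, key: bytes, issuer: str, audience: str,
                        now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if every check passes, None otherwise."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        payload = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError:
        return None  # not three segments, bad base64 or bad JSON
    if not isinstance(header, dict) or not isinstance(payload, dict):
        return None
    if header.get("alg") != "HS256":  # assumption: the only algorithm we accept
        return None
    signed_part = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(key, signed_part, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    audiences = payload.get("aud")
    if not isinstance(audiences, list):
        audiences = [audiences]
    if payload.get("iss") != issuer or audience not in audiences:
        return None
    exp = payload.get("exp")
    now = time.time() if now is None else now
    if not isinstance(exp, (int, float)) or exp <= now:
        return None
    return payload


key = b"hypothetical-shared-key"
token = make_demo_jwt({"iss": "https://idp.example.com/", "aud": "my-api",
                       "exp": 2000}, key)
claims = validate_bearer_jwt(token, key, "https://idp.example.com/", "my-api", now=1000)
print(claims)
```

Note how key, issuer and audience all have to be supplied by you, and kept current by you: that is exactly the chore the metadata-driven middleware takes off your hands.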

Wrap

Getting from Paris to Seattle takes quite a long time, and the post followed suit. If you had the perseverance to read all the way to here, congratulations! You now have a better idea of how passive and active protocols work, and how today’s technology implements those. This should empower you to grasp all sorts of new insights, such as why it is not straightforward to secure both Web API and MVC UX within the same project: when you receive an unauthenticated GET of a given resource, how can you decide if you should treat that as a passive request (“build that sign in message! Return a 302!”) or an active one (“What, no token? So no access to this web API, you fool! 401!”). On this specific problem, the good news is that the OWIN security components for ASP.NET make it pretty easy to handle that exact scenario: and now that you grok all this, I’ll tell you how to do it in the next post.

God bless the intercontinental flights!
